
How to make your video and audio content accessible

From planning out your video layouts to avoiding flashing backgrounds, here are some pro tips for making your audio and video content accessible to everyone. We’ll also cover some best practices and common mistakes that you should avoid.

Claudio Luis Vera

Nov 30, 2021

As the content you publish becomes richer with video, audio, and other embedded media, you have an obligation to make sure that everyone is able to access, operate, and understand it. From the team at Stark, here are some of the best practices to implement, along with a few problem patterns you should stay away from.

Key considerations

When you’re publishing your content, don’t make assumptions about your viewers’ and listeners’ abilities to see, hear, or work a pointing device. Those assumptions could exclude a large part of your audience. For that reason, you should provide more than one format for your content and more than one way to access it. This is what the Web Content Accessibility Guidelines (WCAG) are all about.

Here are the three most important use cases you should focus on:

- First, do you have alternative content for those in your audience that are unable to listen to your audio? It could be someone who’s d/Deaf, hard-of-hearing or someone who’s in a situation where the audio’s off, for example. These folks will rely on you to provide captions, subtitles, or transcripts as alternative content.

- Next, for those that are visually impaired or can’t see the video for some reason, do you have audio descriptions? Because if you're sharing a message through video, someone has to explain the parts with action and no dialogue!

- Finally, what about those folks in your audience who can’t use a mouse or a trackpad? Are you using a media player that can be operated with just a keyboard?

Everyone benefits from having alternative ways to experience audio and video content, especially with captions. In fact, most video content on the internet is watched without sound: that’s 69% web-wide according to Verizon Media, and as much as 85% on Facebook according to Digiday. And if you’re trying to search for content in a video, having transcripts can save you the pain of scrubbing through the whole thing.

Captions, subtitles, and transcripts

Many platforms and apps (like YouTube and Zoom) will use the terms captions, subtitles, and transcripts with different and overlapping meanings — creating no shortage of confusion. Here are the most generally accepted definitions for these forms of alternative text content:

Captions are blocks of text that are synchronized with the media content. They should be bite-sized, much shorter than a tweet in fact. Captions should not run more than 40 characters per line or more than two lines at once — or three lines occasionally in a pinch. Most media players display captions in the bottom third of the video, but the best players allow captions to be repositioned anywhere the user wants.
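
As a rough illustration of those limits, here is a minimal Python sketch that wraps a passage into caption blocks and flags blocks that break the rules. The function names and constants are hypothetical, chosen only for this example, and the sketch ignores timing entirely: real captioning tools also have to decide when each block appears and disappears.

```python
import textwrap

MAX_CHARS_PER_LINE = 40    # per-line limit suggested above
MAX_LINES_PER_CAPTION = 2  # two lines at once (three only in a pinch)


def split_into_captions(text: str) -> list[list[str]]:
    """Wrap text into lines of at most 40 characters, two lines per caption."""
    lines = textwrap.wrap(text, width=MAX_CHARS_PER_LINE)
    return [
        lines[i:i + MAX_LINES_PER_CAPTION]
        for i in range(0, len(lines), MAX_LINES_PER_CAPTION)
    ]


def caption_problems(caption_lines: list[str]) -> list[str]:
    """Return a list of human-readable problems for one caption block."""
    problems = []
    if len(caption_lines) > MAX_LINES_PER_CAPTION:
        problems.append(
            f"{len(caption_lines)} lines (max {MAX_LINES_PER_CAPTION})"
        )
    for line in caption_lines:
        if len(line) > MAX_CHARS_PER_LINE:
            problems.append(
                f"line has {len(line)} characters (max {MAX_CHARS_PER_LINE})"
            )
    return problems
```

`split_into_captions` is useful when you are drafting captions from a script, while `caption_problems` can be run over captions that already exist to spot the ones that need trimming.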

Automatic captions are generated in real-time through AI speech recognition, which is generally 90% accurate — but that remaining 10% can be disappointing to many users. Ever heard the term “Craptions”?

Conferences will often hire someone to do real-time transcription on a setup that’s similar to a court stenographer’s device, using a technology known as CART (Communication Access Realtime Translation). Some sophisticated captioning solutions will use a hybrid approach with automated captions that are corrected on the fly by a human CART operator.

Unless a person is looking directly at the video, it can be tricky to know exactly who is speaking. Whenever possible, you should try to use a captioning platform that identifies each change of speaker, a feature known as speaker attribution.
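
If you write your own caption files, one common way to carry speaker attribution is the voice tag (`<v Name>`) in the WebVTT caption format. The Python sketch below shows the idea under some assumptions: the `Cue` class, the function names, and the sample speaker are all hypothetical, and the text is assumed to contain no characters such as `<` or `&` that would need escaping.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float   # start time in seconds
    end: float     # end time in seconds
    speaker: str   # name shown to the viewer
    text: str      # caption text, assumed free of "<" and "&"


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[Cue]) -> str:
    """Build a WebVTT document with one voice-tagged cue per entry."""
    blocks = ["WEBVTT"]
    for cue in cues:
        timing = f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}"
        blocks.append(f"{timing}\n<v {cue.speaker}>{cue.text}")
    # Cues are separated by blank lines; the file ends with a newline.
    return "\n\n".join(blocks) + "\n"
```

Whether the speaker name is actually displayed depends on the media player, so it is worth testing the output in the player you plan to use.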

Subtitles are delivered in the same way as captions, but the term usually refers to translations that are delivered with pre-recorded content. A video can have many options for subtitles in multiple languages.

Both captions and subtitles are very useful for watchers who don’t understand the language well, as well as for those who have difficulty concentrating on spoken words.

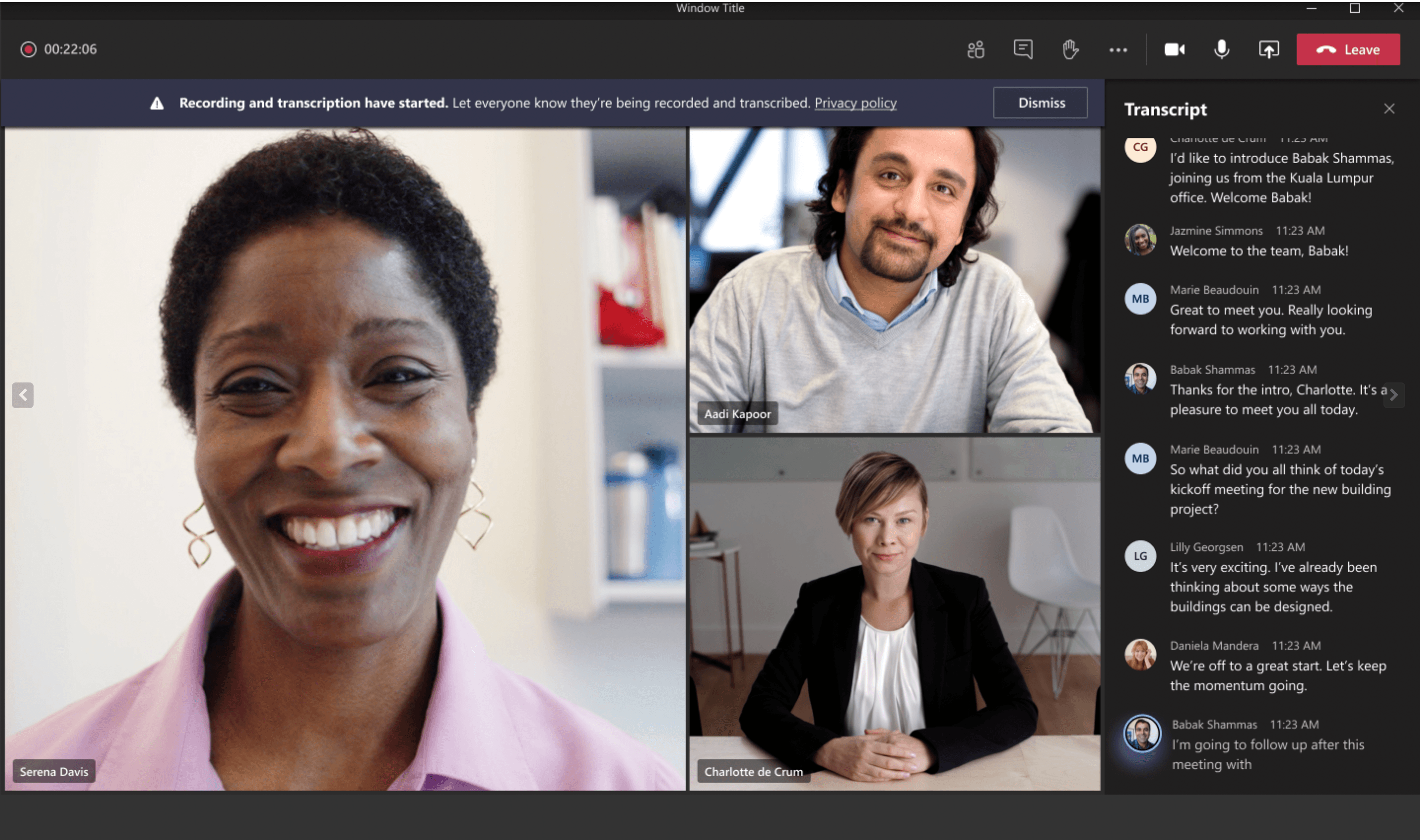

Transcripts are alternative text content for recorded media that’s typically presented in long-form as prose. A transcript can be a separate text file, although many platforms (such as Microsoft Stream) now present it side-by-side with the video. Transcripts can be indexed and searched like text content, making them very useful for SEO or any type of research.
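
To show why a searchable transcript beats scrubbing through a video, here is a small Python sketch. It assumes a hypothetical transcript stored as a list of (start time in seconds, text) pairs; real platforms use their own formats.

```python
def find_in_transcript(
    segments: list[tuple[float, str]], query: str
) -> list[float]:
    """Return the start times of segments whose text contains the query."""
    needle = query.casefold()  # case-insensitive match
    return [start for start, text in segments if needle in text.casefold()]


# Hypothetical sample transcript, for illustration only.
transcript = [
    (0.0, "Welcome to the session."),
    (12.5, "Captions should be short."),
    (30.0, "Transcripts help with search, and captions help everyone."),
]

caption_mentions = find_in_transcript(transcript, "captions")
```

Each returned start time is a point the viewer can jump to directly in the player.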

It’s also worth noting that a web page can work as alternative content in the same way as a transcript, so long as the text matches the script of the pre-recorded media.

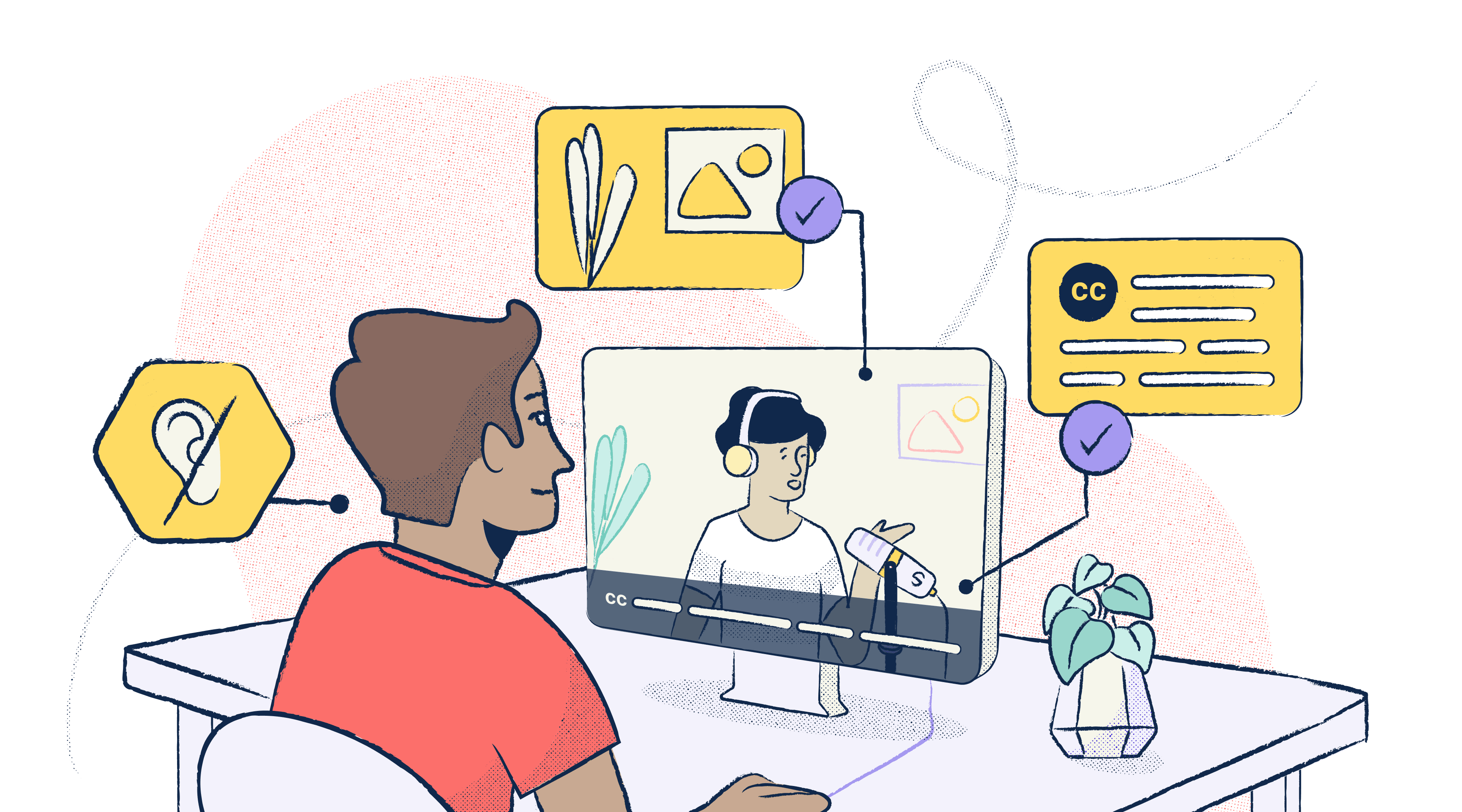

Planning your layouts

When designing a presentation’s layout, you’ll want to leave space for different accessibility features, both on the page and within the media player. Within your video player, the bottom third can be used for titles or a chyron, but keep in mind that these may be covered over by captions. For context, a chyron is the banner graphic famously used on TV news to showcase the latest breaking news or the title of the segment.

If you’re sharing a presentation over a video conferencing platform like Zoom, the top right corner usually has a small inset video of the speaker. Even if it’s not placed there during the presentation, Zoom will automatically overlay the video in that corner.

If you have a video of a sign language interpreter, the convention is to use the bottom right corner for the interpreter. Other platforms use the bottom left for inset speaker videos. With all these considerations, use the middle and top left of the video frame for over-the-shoulder graphics so they’re not covered by captions or video insets.

If you’re laying out a page to contain video, side-by-side captions are preferable to captions within the video frame. Often they are to the right of the video frame in the form of a transcript that is synchronized to the video. An older approach places the transcript within an expandable text element below the video frame, but users may need to scroll or expand the element to even know that it’s there.

Patterns to avoid

Background video

Especially if there’s no way to stop it, a background video can distract the user or, worse, cause them motion sickness. If you must use background video, don’t have a lot of motion, flashes, or quick cuts. Use something understated or relaxing instead, like ripples on the water or passing clouds.

Videos of animated text

There is an entire category of videos that have text animations and no voiceover. Sometimes they may have a soft music track as well. To someone who is visually impaired, the video is just a clip of the background music or blank audio — unless audio descriptions are added.

Self-playing videos

A video that just starts playing is a nuisance to almost anyone using the internet, especially when it involves loud audio. But to a person with a vestibular disorder, videos that play on their own or can’t be stopped can trigger motion sickness as well.

WCAG (Success Criterion 2.2.2: Pause, Stop, Hide) requires a control for the user to pause, stop, or hide a video that starts automatically and lasts for more than 5 seconds. But keep in mind that some background videos can evoke nausea in far less than 5 seconds, so it’s best to include controls regardless of how long your video lasts.

Flashes more than 3 times a second

In 1997, an episode of Pokémon was aired with flashing backgrounds, triggering photosensitive epileptic seizures in viewers across Japan. Nearly 700 children were hospitalized after viewing backgrounds that flashed at 12 Hz for about 6 seconds.

The WCAG guidelines recommend not using flashes larger than the size of a mobile screen at arm’s length, for any longer than 2 seconds, or any faster than 3 times a second.
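
The three-flashes-a-second limit is easy to check once you know when the flashes occur. The Python sketch below assumes you already have a list of flash timestamps in seconds (detecting flashes in real footage is a separate problem) and counts the most flashes that fall within any one-second window. The function names are hypothetical, and the sketch does not model the size or brightness of the flashes.

```python
def max_flashes_per_second(flash_times: list[float]) -> int:
    """Largest number of flashes that fall inside any one-second window."""
    times = sorted(flash_times)
    best = 0
    start = 0
    for end, t in enumerate(times):
        # Slide the window forward until it spans less than one second.
        while t - times[start] >= 1.0:
            start += 1
        best = max(best, end - start + 1)
    return best


def exceeds_flash_limit(flash_times: list[float], limit: int = 3) -> bool:
    """True if any one-second window contains more flashes than the limit."""
    return max_flashes_per_second(flash_times) > limit


# Roughly the pattern described above: 12 flashes a second for 6 seconds.
pokemon_like = [i / 12 for i in range(72)]
too_fast = exceeds_flash_limit(pokemon_like)
```

A pattern like `pokemon_like` lands far above the limit, while a slow pulse such as one flash every half second stays under it.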

Taking this further

If you’re looking to up your accessibility game, there are a few great resources out there to learn from. The BBC has perhaps the most in-depth reference for subtitles and captions in the world, and it’s an excellent benchmark even for seasoned accessibility professionals.

If you’re hosting a meeting or a live event, it’s really important that you plan for services like live captions, CART, and sign language interpreters well in advance. If you create a lot of video content, quality captions and transcripts should be as much a part of your workflow as video editing or voiceover.

As you can see, poor choices and sloppy practices can cause a large segment of your audience to miss out on your content. Or worse: your content can actually trigger nausea or an epileptic seizure.

By making your content accessible, you’re not only benefiting those who may have disabilities but your entire audience as well.

Want to join a community of other designers, developers, and product managers to share, learn, and talk shop around all things accessibility? Join our Slack community, and follow us on Twitter and Instagram.